Google Search Console (GSC) is an indispensable tool for webmasters and SEO professionals. It provides insights into how your website performs in Google Search and identifies critical issues that may hinder the website’s visibility. From page indexing errors to page experience and security & manual actions, GSC equips users with accurate data to optimise their site’s performance.

The importance of Google Search Console in SEO cannot be overstated. GSC allows website owners to monitor their search traffic, resolve technical SEO problems, and enhance their site’s ranking potential. Common errors include indexing issues, sitemap problems, and user experience errors—all of which can impact a site’s crawlability and overall SEO health.

What Are Google Search Console Errors?

Google Search Console errors represent issues the tool identifies that may impact a website’s search visibility and user experience. These errors signal problems that require attention to maintain optimal performance and ensure compliance with search engine standards.

What are the common types of Google Search Console Errors?

The common types of Google Search Console errors are listed below.

- Page Indexing Errors: Problems preventing Google from properly indexing your website pages. Examples include blocked URLs, missing canonical tags, and soft 404 errors.

- Sitemap Errors: Issues related to the XML sitemap, such as unreadable files, excessive URLs, or fetch errors.

- Page Experience Errors: Concerns affecting the user experience, including Core Web Vitals metrics like Largest Contentful Paint (LCP), Interaction to Next Paint (INP, which replaced First Input Delay in March 2024), and Cumulative Layout Shift (CLS).

- Search Enhancement Errors: Problems with structured data, breadcrumbs, AMP, and other features that enhance search results visibility.

- Security and Manual Actions Errors: Security-related issues like malware, phishing, and manual actions for violations of Google’s guidelines, such as cloaking or unnatural links.

How to Identify Errors in Google Search Console?

To identify errors in Google Search Console, follow these steps:

- Log in to Google Search Console: Sign in to your GSC account and select your verified property.

- Review the “Overview” Page: This section summarises critical issues across indexing, enhancements, and security.

- Access the Indexing Report:

- Navigate to the Indexing section to view page indexing issues such as errors, warnings, and excluded URLs.

- Inspect details for each category to understand the cause and impact.

- Inspect the Enhancements Report:

- Check for errors related to breadcrumbs and structured data.

- Use the Rich Results Test to validate structured data implementations.

- Examine Core Web Vitals:

- Open the Core Web Vitals report in the Experience section to review metrics for mobile and desktop.

- Identify issues like slow Largest Contentful Paint (LCP) or high Cumulative Layout Shift (CLS).

- Inspect URLs Individually:

- Use the URL Inspection Tool to check specific pages for crawling, indexing, and rendering details.

- Monitor Security Issues:

- Visit the Security and Manual Actions section to detect malware, phishing, or manual penalties.

- Check Sitemaps:

- Open the Sitemaps section to ensure your submitted sitemap is readable and error-free.

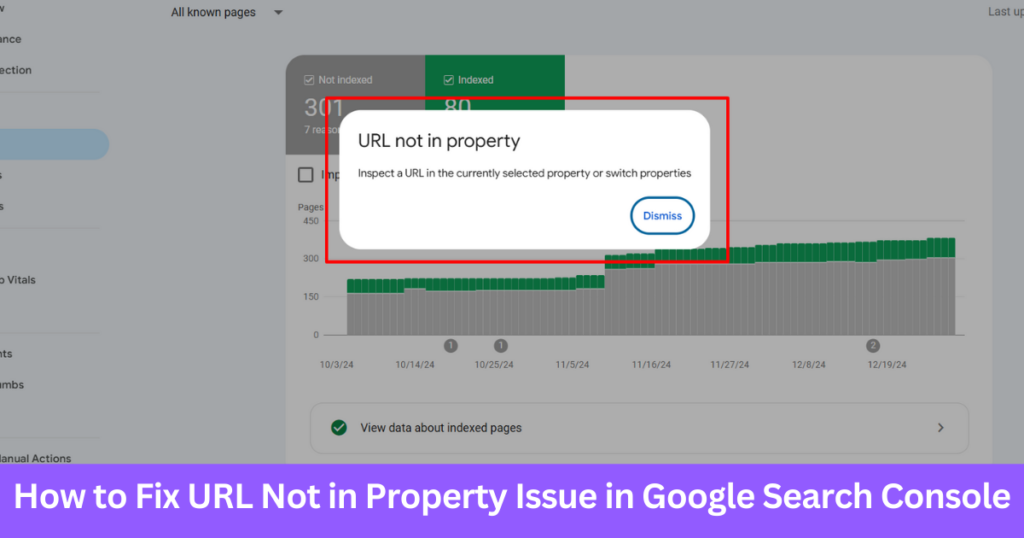

How to Fix the URL Not in Property Issue in Google Search Console?

“URL not in property” is an issue that arises when a specific URL is not part of the verified property in Google Search Console. This can prevent you from accessing critical insights and resolving related issues.

Steps to Fix the URL Not in Property Issue:

- Verify the Property:

- Go to Google Search Console and navigate to the Settings section.

- Use the Ownership Verification option to ensure the domain or URL prefix is verified.

- Verification methods include adding a DNS record, uploading an HTML file, or using a meta tag.

- Ensure Correct Property Type:

- Use a Domain Property to include all subdomains and protocols (e.g., HTTPS and HTTP).

- Alternatively, verify the specific URL Prefix if you only need insights for a particular subfolder or URL.

- Inspect the URL:

- Use the URL Inspection Tool to check the status of the URL.

- If the URL is outside the verified property, adjust the property to include it.

- Validate Changes:

- After updating or verifying the property, recheck the URL using the URL Inspection Tool.

- Ensure that the URL is accessible and correctly associated with the verified property.
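Whether a URL belongs to a property can be reasoned about before opening GSC. The sketch below is a minimal illustration, not Google's own logic: it assumes Domain properties are written in the `sc-domain:example.com` form and URL-prefix properties as a full URL, and the function name `url_in_property` is hypothetical.

```python
from urllib.parse import urlsplit


def url_in_property(url, prop):
    """Return True if `url` would fall inside the given property.

    `prop` is either "sc-domain:example.com" (Domain property) or a full
    URL prefix such as "https://example.com/shop/" (URL-prefix property).
    """
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()

    if prop.startswith("sc-domain:"):
        # Domain property: the domain and every subdomain, on any protocol.
        domain = prop[len("sc-domain:"):].lower()
        return host == domain or host.endswith("." + domain)

    # URL-prefix property: protocol, host, port, and path prefix must match.
    p = urlsplit(prop)
    same_origin = (
        parts.scheme == p.scheme
        and host == (p.hostname or "").lower()
        and parts.port == p.port
    )
    return same_origin and (parts.path or "/").startswith(p.path or "/")


if __name__ == "__main__":
    print(url_in_property("http://blog.example.com/post", "sc-domain:example.com"))
    print(url_in_property("http://example.com/page", "https://example.com/"))
```

The second call shows the typical cause of this issue: an `http://` URL does not belong to an `https://` URL-prefix property, which is why a Domain property is often the simpler choice.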

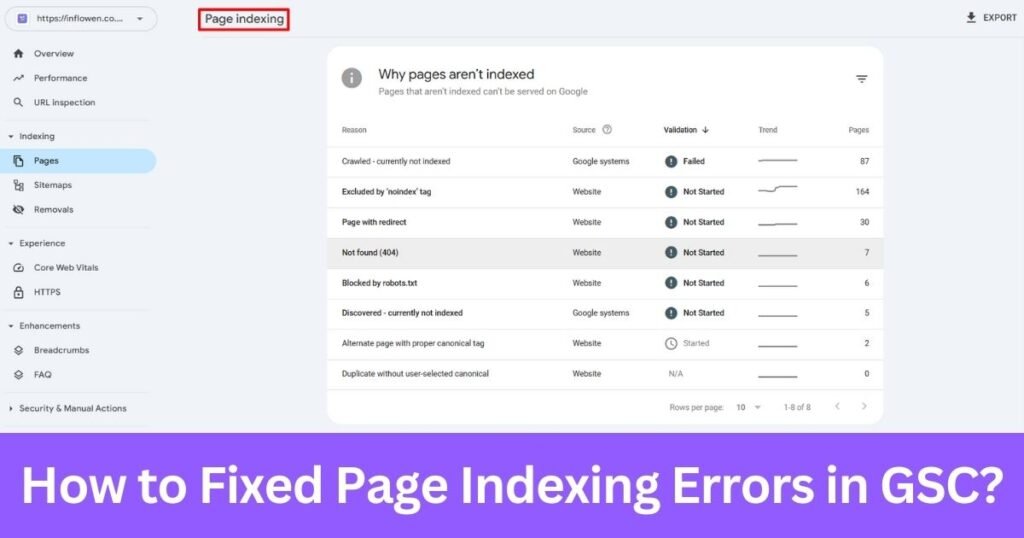

How to Fix Page Indexing Errors in GSC?

Page Indexing errors occur when Google is unable to properly index a page, resulting in it not appearing in search results as expected. These issues can arise due to technical, content-related, or configuration problems that hinder Google’s ability to crawl and index a page effectively.

Crawled – Currently Not Indexed

Crawled – currently not indexed refers to an issue where Google has crawled the page but has chosen not to index it. This can occur due to low-quality content, duplication, or other factors signaling to Google that the page is not valuable enough for its index.

Steps to Fix the Issue:

- Improve Content Quality:

- Ensure the page provides unique, comprehensive, and valuable information.

- Avoid thin content and ensure the content addresses user intent effectively.

- Check for Duplicate Content:

- Use tools like Copyscape to identify and resolve duplication issues.

- Implement canonical tags to point to the primary version of the content.

- Verify Internal Linking:

- Add internal links from high-authority pages within your site to the affected page.

- Ensure anchor texts are relevant and descriptive.

- Optimise Metadata:

- Create a compelling meta title and description that align with search intent.

- Avoid over-optimisation or keyword stuffing.

- Review Crawl Budget:

- Ensure your website’s crawl budget is not exhausted on less important pages.

- Use the robots.txt file wisely to block unnecessary pages from being crawled.

- Resubmit the URL:

- Use the URL Inspection Tool in GSC to request indexing.

- Monitor the status over time to confirm if the issue has been resolved.

Excluded by ‘noindex’ Tag

Excluded by ‘noindex’ tag occurs when a page is intentionally marked with a noindex directive, signalling to search engines not to index the page. This tag is often used for pages that are not intended for public search results, such as internal resources or test pages.

Steps to Fix the Issue:

- Verify the Intent of the noindex Tag:

- Determine if the noindex tag is applied intentionally. For internal or temporary pages, the tag might be appropriate.

- If the page should be indexed, remove the noindex directive.

- Update Robots Meta Tag:

- Edit the page’s HTML to remove the <meta name="robots" content="noindex"> directive.

- Ensure the updated directive allows indexing, e.g., <meta name="robots" content="index, follow">.

- Check Header Responses:

- Verify whether the X-Robots-Tag HTTP header includes noindex.

- Remove or update the header if necessary to allow indexing.

- Resubmit the URL:

- Use the URL Inspection Tool to validate the changes and request reindexing.

- Monitor the page’s status in GSC to ensure it is indexed correctly.

- Inspect CMS Settings:

- Review content management system (CMS) configurations to ensure there are no default noindex settings applied to the page.
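The meta tag and header checks above can be combined into one quick test. This is a rough sketch using only the Python standard library; the function name `has_noindex` is hypothetical, and it deliberately ignores user-agent-scoped header values such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class _RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k: (v or "") for k, v in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html, headers=None):
    """Return True if the HTML or the X-Robots-Tag header asks not to be indexed."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    directives = set(parser.directives)

    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            directives.update(d.strip().lower() for d in value.split(","))

    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives


if __name__ == "__main__":
    page = '<head><meta name="robots" content="noindex, follow"></head>'
    print(has_noindex(page))
    print(has_noindex("<p>Hello</p>", {"X-Robots-Tag": "noindex"}))
```

Checking the header as well as the HTML matters because a CMS plugin or server rule can add `X-Robots-Tag: noindex` even when the page source looks clean.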

Page with Redirect

Page with redirect refers to a URL that forwards users to another page via a redirect. While redirects are common and often necessary for maintaining a seamless user experience, improper or excessive use of redirects can lead to indexing issues in Google Search Console.

Steps to Fix the Issue:

- Inspect Redirect Chains:

- Identify if the affected URL is part of a redirect chain (e.g., A → B → C).

- Simplify the chain by redirecting directly to the final destination (e.g., A → C).

- Fix Redirect Loops:

- Check for loops where a URL redirects back to itself or creates an endless cycle.

- Correct the redirect configuration to ensure a clear, one-way flow.

- Use Proper Redirect Types:

- Implement 301 redirects for permanent changes and 302 redirects for temporary changes.

- Prefer server-side redirects over JavaScript or meta-refresh redirects, which search engines may process more slowly or less reliably.

- Update Internal Links:

- Replace outdated internal links pointing to redirected URLs with direct links to the target page.

- Validate Using GSC:

- Use the URL Inspection Tool to verify the redirect is functioning correctly.

- Ensure the target page is indexable and not blocked by robots.txt or a noindex directive.

- Monitor Redirects:

- Regularly audit redirects to ensure they remain necessary and effective.
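Redirect chains and loops are easy to spot once the redirect rules are written down as a simple source-to-target map. The sketch below assumes such a map has already been exported from the server or CMS; the function names and the sample paths are illustrative only.

```python
def trace_redirects(redirects, start, max_hops=10):
    """Follow `start` through a {source: target} redirect map.

    Returns (path, status), where status is "ok" (zero or one hop),
    "chain" (two or more hops), "loop", or "too_long".
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too_long"
    hops = len(path) - 1
    return path, ("chain" if hops > 1 else "ok")


def flatten(redirects):
    """Point every source straight at its final destination (A -> C)."""
    flat = {}
    for source in redirects:
        path, status = trace_redirects(redirects, source)
        if status in ("ok", "chain"):
            flat[source] = path[-1]
    return flat


if __name__ == "__main__":
    rules = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x"}
    print(trace_redirects(rules, "/a"))
    print(trace_redirects(rules, "/x"))
    print(flatten(rules))
```

Loops are left out of the flattened map on purpose, since they need a manual decision about which URL should be the final destination.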

Not Found (404)

Not found (404) refers to an issue where the requested URL cannot be found on the server. This error commonly arises when a page is deleted, the URL is incorrect, or broken links are pointing to non-existent pages. It can negatively impact user experience and search engine optimisation.

Steps to Fix the Issue:

- Restore the Page:

- If the page was removed unintentionally, recreate the content and ensure it aligns with user intent.

- Implement 301 Redirects:

- Redirect the broken URL to a relevant alternative or updated page using a 301 redirect.

- This ensures users and search engines are directed to the correct content.

- Update Internal Links:

- Identify and correct internal links pointing to the 404 page.

- Replace these links with updated URLs or remove them if the content is obsolete.

- Audit External Links:

- Use tools like Ahrefs or Semrush to find broken backlinks pointing to the 404 page.

- Contact the linking sites and request updates to the correct URL.

- Remove Invalid Links from Sitemap:

- Ensure the sitemap does not include URLs leading to 404 errors.

- Update and resubmit the sitemap in Google Search Console.

- Monitor Using Google Search Console:

- Regularly check the Page indexing report (formerly the Coverage report) in GSC to identify new 404 errors.

- Use the URL Inspection Tool to verify fixes and request reindexing.

- Customise the 404 Page:

- Create a user-friendly 404 page with helpful navigation links or a search bar to guide users to relevant content.

Blocked by robots.txt

Blocked by robots.txt refers to a situation where a webpage is restricted from being crawled by search engine bots due to directives specified in the robots.txt file. This issue can prevent important pages from being indexed, leading to reduced visibility in search results.

Steps to Fix the Issue:

- Identify Blocked Pages:

- Use the Indexing report in Google Search Console to pinpoint pages blocked by robots.txt.

- Cross-check the URLs with your robots.txt file.

- Review robots.txt Directives:

- Locate the robots.txt file in your website’s root directory.

- Check for rules that disallow crawling of essential pages (e.g., Disallow: /example-page/).

- Allow Access to Important Pages:

- Update the robots.txt file to remove disallow rules for critical pages, or add an explicit allow rule. For example:

      User-agent: *
      Allow: /important-page/

- Test Changes:

- Use the robots.txt report in Google Search Console (under Settings), which replaced the retired robots.txt Tester, to check the updated rules.

- Ensure the changes allow search engine bots to crawl the intended pages.

- Submit Updated robots.txt:

- Save the revised robots.txt file in your website’s root directory.

- Request a recrawl from the robots.txt report in GSC so Google picks up the updated file sooner.

- Verify Crawling Status:

- Monitor the affected pages using the URL Inspection Tool in GSC.

- Check if the pages are now accessible and indexed by search engines.

- Implement Best Practices:

- Avoid blocking important pages such as landing pages or blog posts.

- Restrict access only to non-critical pages, such as admin sections or test environments.
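Before uploading a revised robots.txt, the rules can be tested locally against a list of important URLs. The sketch below uses Python's built-in `urllib.robotparser`; note that this parser applies rules in file order and does not implement Google's wildcard or longest-match behaviour, so treat it as a first-pass check rather than a replica of Googlebot. The helper name `blocked_urls` and the sample URLs are assumptions.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /admin/\nDisallow: /staging/\n"
    pages = [
        "https://example.com/blog/post",
        "https://example.com/admin/login",
        "https://example.com/staging/home",
    ]
    for url in blocked_urls(rules, pages):
        print("blocked:", url)
```

Running this against the URLs in your sitemap is a cheap way to catch a rule that accidentally blocks landing pages or blog posts.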

Discovered – Currently Not Indexed

Discovered – currently not indexed occurs when Google has identified a URL but has not yet crawled it. This issue may arise due to a limited crawl budget, low page priority, or technical restrictions preventing the page from being fetched.

Steps to Fix the Issue:

- Ensure the Page is Crawlable:

- Verify that the page is not blocked by robots.txt or a noindex directive.

- Remove any restrictions preventing Googlebot from accessing the URL.

- Optimise Internal Linking:

- Add internal links to the page from high-authority sections of your site.

- Use descriptive anchor texts that signal relevance.

- Submit the URL Manually:

- Use the URL Inspection Tool in GSC to request crawling of the page.

- Ensure the page is included in your XML sitemap for easier discovery.

- Review Crawl Budget:

- Check your server’s performance and ensure it can handle increased crawling.

- Prioritise important pages in your sitemap to allocate crawl budget effectively.

- Improve Page Priority:

- Enhance the content quality and relevance to signal its importance to Google.

- Avoid creating thin or duplicate pages that reduce overall site quality.

- Monitor GSC Reports:

- Regularly review the Page indexing report to track the status of discovered URLs.

- Address new issues promptly as they appear.
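Internal linking is one of the few signals here that can be measured directly. The following sketch counts inbound internal links per page from a crawl export, assuming the export has been reduced to a `{page: [linked pages]}` dictionary; that structure and the function names are hypothetical.

```python
from collections import Counter


def inbound_link_counts(link_graph):
    """Count how many distinct pages link to each page (self-links ignored)."""
    counts = Counter({page: 0 for page in link_graph})
    for source, targets in link_graph.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


def weakly_linked(link_graph, min_links=2):
    """Return pages with fewer than `min_links` inbound internal links."""
    counts = inbound_link_counts(link_graph)
    return sorted(page for page, n in counts.items() if n < min_links)


if __name__ == "__main__":
    graph = {
        "/": ["/blog", "/about"],
        "/blog": ["/", "/blog/post-1"],
        "/about": ["/"],
        "/blog/post-1": [],
        "/orphan": [],
    }
    print(weakly_linked(graph, min_links=1))
```

Pages that appear in this list and also sit in "Discovered – currently not indexed" are good candidates for new internal links.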

Alternate Page with Proper Canonical Tag

Alternate page with a proper canonical tag refers to a scenario where a page is recognised as a duplicate but is correctly marked with a canonical tag pointing to the preferred URL. While this setup is intentional, issues can arise if the canonical tag is improperly implemented or if the preferred page is not indexed.

Steps to Fix the Issue:

- Verify the Canonical Tag:

- Inspect the source code of the alternate page to ensure the canonical tag is correctly pointing to the preferred URL.

- Use Google Search Console or browser extensions to validate canonical tag implementation.

- Check the Preferred URL:

- Ensure the URL specified in the canonical tag is live, crawlable, and not blocked by robots.txt or a noindex directive.

- Confirm that the preferred page has valuable, unique content that warrants its selection as the canonical version.

- Validate Internal Links:

- Ensure internal links across your website point directly to the preferred URL rather than alternate pages.

- Update any outdated links that may cause confusion for search engines.

- Review the Sitemap:

- Include only the preferred URLs in your XML sitemap.

- Avoid listing alternate pages with canonical tags unless absolutely necessary.

- Use the URL Inspection Tool:

- In Google Search Console, inspect both the alternate and preferred URLs.

- Check if the canonical tag is correctly recognised and if the preferred page is indexed.

- Improve the Preferred Page:

- Optimise the content of the preferred URL to ensure it provides comprehensive value and relevance.

- Avoid duplicating content across alternate pages without meaningful differentiation.

- Monitor Changes:

- Regularly check the Page indexing report in Google Search Console to identify any recurring issues with alternate pages.

- Address new problems promptly to maintain consistent indexing and ranking.

Duplicate Without User-Selected Canonical

Duplicate without user-selected canonical refers to a scenario where multiple versions of a page exist, and none is explicitly identified as the preferred one for indexing. This can confuse search engines, leading to split ranking signals and reduced visibility.

Steps to Fix the Issue:

- Identify Duplicate Pages:

- Use Google Search Console to locate duplicate pages.

- Check for pages with similar content or slight URL variations (e.g., with and without query parameters).

- Implement Canonical Tags:

- Add a <link rel="canonical" href="preferred-URL"> tag in the <head> section of the duplicate pages.

- Ensure the canonical tag points to the main version of the page.

- Update the Sitemap:

- Include only the canonical (preferred) URLs in your XML sitemap.

- Remove duplicate URLs to prevent confusion.

- Check Internal Links:

- Update internal links to point directly to the canonical page instead of duplicates.

- Ensure anchor texts are relevant and consistent.

- Test with Google Search Console:

- Use the URL Inspection Tool to confirm that the canonical tag is recognised by Google.

- Submit the canonical URL for re-indexing.

- Monitor Analytics:

- Track traffic and ranking metrics for the canonical and duplicate pages.

- Ensure signals are consolidated on the preferred page over time.

- Use 301 Redirects (Optional):

- If duplicates are unnecessary, set up 301 redirects from duplicate pages to the canonical URL.

- This helps preserve link equity and enhances user experience.
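To audit canonical tags across many duplicates, it helps to extract the tag from each page and compare it with the page's own URL. A minimal sketch, assuming the HTML has already been downloaded; `canonical_status` and its status labels are hypothetical names, not GSC terminology.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class _CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        attr = {k: (v or "") for k, v in attrs}
        if tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.hrefs.append(attr.get("href", ""))


def canonical_status(page_url, html):
    """Return (status, canonical_url) for a page.

    status is "missing", "conflicting", "self", or "points_elsewhere".
    """
    parser = _CanonicalParser()
    parser.feed(html)
    # Resolve relative hrefs against the page URL before comparing.
    hrefs = [urljoin(page_url, h) for h in parser.hrefs if h]
    if not hrefs:
        return "missing", None
    if len(set(hrefs)) > 1:
        return "conflicting", None
    target = hrefs[0]
    return ("self" if target == page_url else "points_elsewhere"), target


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="https://example.com/shoes"></head>'
    print(canonical_status("https://example.com/shoes?sort=price", html))
```

A duplicate reported as "missing" or "conflicting" is the kind of page that tends to end up under "Duplicate without user-selected canonical".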

Server Error (5xx)

Server Error (5xx) occurs when the server hosting the website fails to fulfil a request made by the user or search engine bots. These errors indicate issues such as temporary server unavailability, misconfigurations, or overloading, which can impact website accessibility and SEO performance.

Steps to Fix the Issue:

- Identify the Specific Error Code:

- Use server logs or Google Search Console to determine the exact type of 5xx error (e.g., 500 Internal Server Error, 502 Bad Gateway, 503 Service Unavailable).

- Check Server Resources:

- Ensure your server has sufficient resources (CPU, memory, bandwidth) to handle incoming requests.

- Upgrade your hosting plan if necessary.

- Fix Configuration Issues:

- Review server configuration files (e.g., .htaccess, nginx.conf, httpd.conf) for errors or misconfigurations.

- Test configuration changes to ensure they resolve the issue.

- Inspect Application Errors:

- Check website applications, plugins, or scripts for bugs or compatibility issues.

- Update or disable problematic extensions that may cause server crashes.

- Resolve Database Issues:

- Verify database connections and queries to ensure they are functioning correctly.

- Repair corrupted databases and optimise queries to reduce server load.

- Monitor Traffic Spikes:

- Use monitoring tools to detect unusual traffic patterns that may overload the server.

- Implement caching mechanisms (e.g., CDN, server-side caching) to reduce server strain.

- Implement Failover Solutions:

- Set up a backup server or load balancer to handle traffic during primary server downtimes.

- Use a Content Delivery Network (CDN) to distribute server load.

- Test and Validate Fixes:

- Use Google’s URL Inspection Tool or third-party error checkers to confirm the server error is resolved.

- Regularly monitor server performance to prevent recurring issues.
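Server logs usually show which URLs returned 5xx responses to Googlebot before GSC reports them. The sketch below assumes access logs in the common "combined" format and matches Googlebot by user-agent substring only (which can be spoofed); the regular expression and function name are illustrative.

```python
import re
from collections import Counter

# Matches the request and status portion of a combined-format log line,
# e.g. "GET /checkout HTTP/1.1" 503
LOG_LINE = re.compile(r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def server_errors_by_path(log_lines, user_agent_substring="Googlebot"):
    """Count 5xx responses per (path, status) for requests from the given agent."""
    counts = Counter()
    for line in log_lines:
        if user_agent_substring not in line:
            continue
        match = LOG_LINE.search(line)
        if match and match.group("status").startswith("5"):
            counts[(match.group("path"), match.group("status"))] += 1
    return counts


if __name__ == "__main__":
    sample = [
        '66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /checkout HTTP/1.1" 503 512 '
        '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    ]
    for (path, status), n in server_errors_by_path(sample).items():
        print(status, path, n)
```

Grouping by path and status makes it easier to tell a site-wide outage (many paths, 503) from a single broken template or script (one path, 500).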

Soft 404

Soft 404 refers to a situation where a webpage returns a “200 OK” status code but displays content indicating the page is not available (such as a “Page Not Found” message). This can confuse search engines and negatively impact user experience and indexing.

Steps to Fix the Issue:

- Verify the Issue:

- Use the URL Inspection Tool in Google Search Console to confirm the page is flagged as a soft 404.

- Check if the page’s content accurately represents its status.

- Restore Missing Pages:

- If the page was removed unintentionally, recreate the original content and ensure it provides value to users.

- Update any internal or external links pointing to the missing page.

- Implement Proper HTTP Status Codes:

- For permanently removed pages, return a “404 Not Found” or “410 Gone” status code.

- For temporary unavailability, use a “503 Service Unavailable” status code.

- Redirect Appropriately:

- Set up 301 redirects to direct users and search engines to a relevant page if the original content is no longer available.

- Avoid redirecting all soft 404 pages to the homepage, as this can confuse users and search engines.

- Improve Content Quality:

- Ensure the page provides unique and relevant content that satisfies user intent.

- Avoid thin or low-value content that may trigger a soft 404 classification.

- Update the Sitemap:

- Remove soft 404 pages from your XML sitemap to prevent search engines from repeatedly crawling them.

- Ensure the sitemap only includes live, valuable URLs.

- Monitor for Recurrence:

- Regularly check the Page indexing report in Google Search Console to identify new soft 404 issues.

- Address them promptly to maintain a healthy site structure.
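A rough local heuristic can flag likely soft 404s before Google does. The sketch below is an assumption-laden approximation, not Google's classifier: the phrase list, the 50-word threshold, and the function name are all arbitrary choices for illustration.

```python
import re

# Phrases that commonly appear on error pages (illustrative, not exhaustive).
NOT_FOUND_PHRASES = (
    "page not found",
    "no longer available",
    "nothing was found",
)


def looks_like_soft_404(status_code, body_text, min_words=50):
    """Return True if a 200 response looks like an error or empty page."""
    if status_code != 200:
        # A real 404/410/503 is not a *soft* 404.
        return False
    text = re.sub(r"\s+", " ", body_text).strip().lower()
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    # Very thin pages are also candidates for soft 404 classification.
    return len(text.split()) < min_words


if __name__ == "__main__":
    print(looks_like_soft_404(200, "Sorry, Page Not Found"))
    print(looks_like_soft_404(404, "Page not found"))
```

Pages flagged this way should either return a real 404 or 410 status, redirect to a relevant page, or be given substantive content.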

Blocked Due to Unauthorized Request (401)

Blocked Due to Unauthorized Request (401) occurs when a webpage requires authentication, but the search engine bot or user trying to access it does not have the necessary credentials. This can lead to indexing issues and restricted access to content.

Steps to Fix the Issue:

- Identify Affected Pages:

- Use the Page indexing report in Google Search Console to locate URLs returning a 401 error.

- Verify the authentication requirements for these pages.

- Adjust Authentication Settings:

- Remove authentication requirements for pages meant to be publicly accessible.

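- If authentication is necessary, the 401 response already keeps the content out of the index; a noindex meta tag on the page itself will not be seen, because Googlebot cannot load the page. Keep such URLs out of your sitemap.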

- Validate Access for Googlebot:

- If protected content must be crawled, allow verified Googlebot requests through (for example, by confirming the crawler with a reverse DNS lookup) rather than trusting the user-agent string alone.

- Ensure Googlebot has access without compromising site security.

- Update robots.txt and Meta Tags:

- Check the robots.txt file to ensure it does not block Googlebot from accessing essential pages.

- Use meta tags like <meta name="robots" content="noindex"> for pages that should not appear in search results.

- Check CMS or Hosting Configuration:

- Ensure your content management system (CMS) or server does not require unnecessary authentication for public pages.

- Adjust settings to align with SEO goals.

- Use the URL Inspection Tool:

- Test affected URLs in Google Search Console’s URL Inspection Tool to confirm access issues are resolved.

- Submit the fixed URL for reindexing.

- Monitor and Reassess:

- Regularly review GSC reports to identify new instances of 401 errors.

- Implement periodic audits to prevent recurring issues.

Blocked Due to Access Forbidden (403)

Blocked Due to Access Forbidden (403) occurs when a server denies access to a requested resource due to insufficient permissions. This issue prevents Googlebot and users from accessing the page, potentially impacting its visibility and indexing in search engines.

Steps to Fix the Issue:

- Identify the Affected URLs:

- Use the Indexing report in Google Search Console to locate pages returning a 403 error.

- Verify the intended access restrictions for these pages.

- Check File Permissions:

- Review and adjust file and directory permissions on the server to ensure proper access.

- Set permissions to 644 for files and 755 for directories, unless stricter security is required.

- Verify Server Configuration:

- Check your server’s configuration files (e.g., .htaccess, nginx.conf, httpd.conf) for rules that may block access.

- Update or remove restrictive directives causing unintended blocks.

- Whitelist Googlebot:

- Ensure that Googlebot’s IP range is not blocked by server security rules or firewalls.

- Add Googlebot to any relevant whitelists.

- Inspect Access Control Rules:

- Check for IP bans, geolocation restrictions, or user-agent blocks that may prevent access.

- Remove unnecessary restrictions for pages meant to be publicly accessible.

- Use the URL Inspection Tool:

- Test the affected URLs in Google Search Console to confirm resolution of the 403 error.

- Request reindexing of fixed URLs.

- Update robots.txt File:

- Ensure the robots.txt file does not inadvertently block Googlebot or essential pages.

- Use directives like Allow to permit access where necessary.

- Monitor Logs and GSC Reports:

- Regularly review server logs and Google Search Console reports to identify new instances of 403 errors.

- Address recurring issues promptly to maintain access and indexing.
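Before adding an IP address to an allow list, it is worth confirming that it really belongs to Googlebot, since the user-agent string is trivial to fake. The sketch below follows the commonly documented reverse-then-forward DNS check. It handles IPv4 only, the accepted hostname suffixes are an assumption that should be checked against Google's current guidance, and the lookups are injectable so the logic can be tested offline.

```python
import socket

# Hostname suffixes accepted as Google-owned (assumption; verify against current docs).
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(
    ip,
    reverse_lookup=socket.gethostbyaddr,
    forward_lookup=socket.gethostbyname_ex,
):
    """Return True if `ip` reverse-resolves to a Google host that resolves back to `ip`."""
    try:
        hostname = reverse_lookup(ip)[0]
        if not hostname.lower().rstrip(".").endswith(GOOGLE_SUFFIXES):
            return False
        # The forward lookup must include the original IP, or the PTR record is spoofed.
        return ip in forward_lookup(hostname)[2]
    except OSError:
        # Covers socket.herror and socket.gaierror (failed lookups).
        return False


if __name__ == "__main__":
    # Offline demonstration with stubbed lookups instead of real DNS.
    def fake_reverse(ip):
        return ("crawl-66-249-66-1.googlebot.com", [], [ip])

    def fake_forward(host):
        return (host, [], ["66.249.66.1"])

    print(is_verified_googlebot("66.249.66.1", fake_reverse, fake_forward))
```

Only requests that pass this check should bypass firewall or rate-limit rules; everything else claiming to be Googlebot can be treated as ordinary traffic.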

URL Blocked Due to Other 4xx Issue

URL Blocked Due to Other 4xx Issue refers to cases where a URL returns a 4xx client error other than 401, 403, or 404, such as 400 (Bad Request), 405 (Method Not Allowed), or 429 (Too Many Requests). These errors indicate issues that prevent users and search engines from accessing the content.

Steps to Fix the Issue:

- Identify the Specific 4xx Error:

- Use Google Search Console’s page indexing report to locate URLs affected by 4xx errors.

- Check server logs to determine the exact error code and its cause.

- Review Server Configuration:

- Check your server settings to ensure proper handling of HTTP requests.

- Adjust configurations to resolve errors like 400 (e.g., invalid URL syntax) or 405 (unsupported HTTP methods).

- Inspect Application Code:

- If the issue is caused by application-level restrictions, review and update the code to allow valid requests.

- Fix bugs or incompatibilities that may trigger these errors.

- Monitor Traffic Patterns:

- For 429 (Too Many Requests), implement rate limiting correctly to prevent overloading without blocking legitimate traffic.

- Use tools like Cloudflare or a CDN to manage traffic effectively.

- Validate URLs:

- Ensure that all URLs in your sitemap and internal links are valid and correctly formatted.

- Update or remove invalid URLs to prevent repeated 4xx errors.

- Provide Appropriate HTTP Responses:

- For permanently removed pages, use 404 or 410 status codes to signal their removal.

- For temporary issues, use 503 (Service Unavailable) to indicate a temporary unavailability.

- Test with URL Inspection Tool:

- Use the URL Inspection Tool in Google Search Console to check affected URLs after implementing fixes.

- Request indexing to verify that the issue is resolved.

- Monitor and Audit Regularly:

- Continuously review your website’s performance and error reports in GSC.

- Perform regular audits to identify and fix new instances of 4xx issues.
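When triaging crawl results, it can help to sort status codes into the same buckets this guide uses. The mapping below is a sketch that mirrors the section headings above; it does not claim to reproduce how Google assigns edge cases (for example 410), and the function name is hypothetical.

```python
def indexing_label(status_code):
    """Map an HTTP status code to the issue heading used in this guide."""
    if 200 <= status_code < 300:
        return "OK (2xx)"
    if 300 <= status_code < 400:
        return "Page with redirect"
    if status_code == 401:
        return "Blocked due to unauthorized request (401)"
    if status_code == 403:
        return "Blocked due to access forbidden (403)"
    if status_code == 404:
        return "Not found (404)"
    if 400 <= status_code < 500:
        return "URL blocked due to other 4xx issue"
    if 500 <= status_code < 600:
        return "Server error (5xx)"
    return "Unknown status"


if __name__ == "__main__":
    for code in (200, 301, 401, 403, 404, 429, 503):
        print(code, "->", indexing_label(code))
```

Applying this to a crawl export gives a quick count per issue type, which helps decide which section of fixes to work through first.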

Blocked by ‘Page Removal Tool’

Blocked by ‘Page Removal Tool’ refers to a scenario where someone has manually requested that a URL be removed from Google’s search results using the Removals tool in Google Search Console. This issue can prevent essential pages from appearing in search results if the removal request was unintentional or outdated.

Steps to Fix the Issue:

- Review the Removal Request:

- Log in to Google Search Console and navigate to the Removals tool.

- Check for active removal requests that may still be in effect.

- Cancel Unnecessary Removals:

- Identify URLs that were removed unintentionally or are no longer relevant for removal.

- Cancel the request by selecting the affected URL and opting to revoke the removal.

- Inspect the URL Status:

- Use the URL Inspection Tool to confirm whether the page is accessible and not restricted by other means (e.g., robots.txt or noindex tags).

- Check for any errors or warnings related to the URL.

- Update and Resubmit URLs:

- Ensure the content of the affected page is valuable and aligns with user intent.

- Resubmit the URL for indexing using the URL Inspection Tool.

- Monitor the Coverage Report:

- Review the Page indexing report in Google Search Console to confirm that the page is indexed and no longer blocked.

- Address any additional warnings or exclusions highlighted in the report.

- Implement Preventative Measures:

- Restrict access to the Page Removal Tool within your organisation to avoid accidental removals.

- Regularly audit removal requests to ensure they align with your SEO and content strategy.

Indexed, Though Blocked by robots.txt

Indexed, Though Blocked by robots.txt refers to situations where a URL is included in Google’s index despite being restricted by a robots.txt file. This typically happens when Google is aware of the URL from external links but cannot access its content due to crawling restrictions.

Actionable Steps to Fix the Issue:

- Review robots.txt Directives:

- Access your robots.txt file and check for disallow rules that block the affected URL.

- Confirm whether the blocking is intentional or accidental.

- Evaluate Indexing Intent:

- Determine if the page is meant to appear in search results.

- If the page should not be indexed, allow crawling and add a noindex meta tag instead of relying solely on robots.txt; Googlebot can only see the noindex directive on pages it is allowed to crawl.

- Update robots.txt Appropriately:

- If the page should be accessible, remove or modify the disallow directive in the robots.txt file.

Example of allowing access:

      User-agent: *
      Allow: /example-page/

- Use the URL Inspection Tool:

- Inspect the affected URL in Google Search Console to confirm its current status.

- Resubmit the URL for crawling after updating the robots.txt file.

- Apply Meta Tags for Better Control:

- Add a <meta name="robots" content="noindex"> tag if the page should not be indexed but still needs to be crawled.

- Use <meta name="robots" content="index, follow"> for pages you want indexed and accessible.

- Monitor Coverage Reports:

- Regularly check the Page indexing report in GSC to ensure no URLs remain indexed against your intent.

- Address new occurrences promptly to maintain consistency.

- Validate Changes:

- Use the robots.txt report in Google Search Console to verify that Google has fetched the updated file without errors.

- Confirm that Googlebot can or cannot access the page, depending on your desired outcome.

Page Indexed Without Content

Page Indexed Without Content refers to instances where a URL is included in Google’s index but lacks significant or visible content when accessed. This can happen due to technical issues, incorrect configurations, or placeholder pages that offer no value to users or search engines.

Steps to Fix the Issue:

- Inspect the Affected Page:

- Use the URL Inspection Tool in Google Search Console to check the page’s current status and identify potential issues.

- Verify if the content is rendering correctly and accessible to Googlebot.

- Check for Rendering Problems:

- Run a live test in the URL Inspection Tool and review the rendered HTML and screenshot to ensure proper rendering.

- Address any JavaScript or CSS issues that might block content loading.

- Ensure Content Availability:

- Confirm that the page has valuable, relevant content visible to both users and search engines.

- Avoid publishing placeholder pages or thin content.

- Review Canonical Tags:

- Verify that canonical tags are correctly implemented and not pointing to an empty or incorrect URL.

- Update the canonical tag to reference the correct, content-rich page if necessary.

- Check Metadata and Headers:

- Ensure the page’s metadata (title, description) accurately reflects the page content.

- Avoid using misleading or irrelevant meta descriptions.

- Address Indexing Issues:

- If the page should not be indexed, add a noindex tag or remove it from the sitemap.

- For essential pages, request reindexing after fixing the content.

- Test Internal Linking:

- Verify that internal links pointing to the page are working correctly and provide context about the page’s purpose.

- Update or remove broken or irrelevant links.

- Monitor Performance:

- Regularly check the Page indexing report in Google Search Console to identify similar issues in the future.

- Address new problems promptly to maintain content visibility.

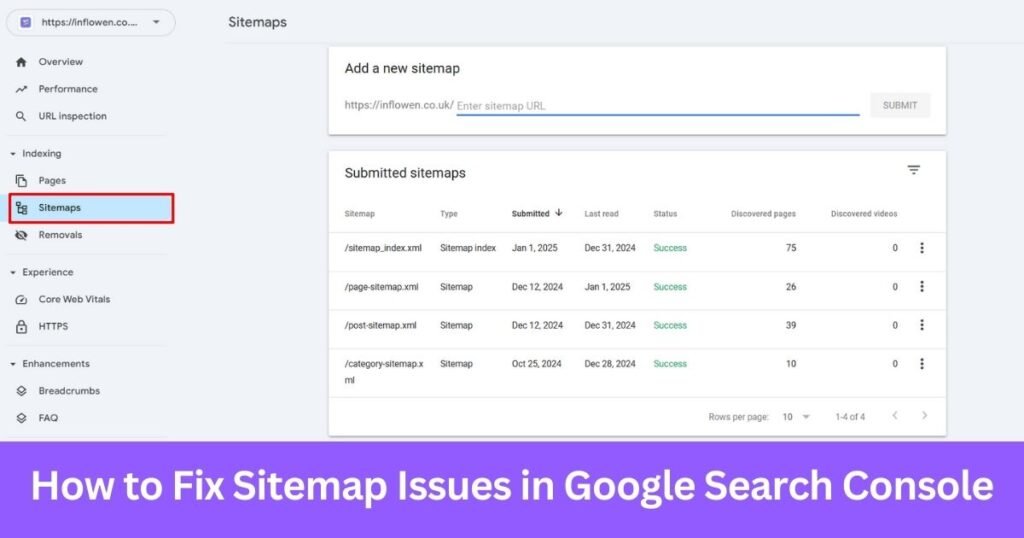

How to Fix Sitemap Issues in Google Search Console?

Sitemap issues in Google Search Console occur when there are problems with the XML sitemap submitted to Google. These issues can hinder search engines from efficiently discovering and crawling a website’s content. Common problems include unreadable files, excessive URLs, and errors preventing Google from fetching the sitemap.

Sitemap issues can disrupt the indexing process, reducing a website’s search engine visibility. Resolving these issues ensures an accurate representation of a website’s structure for better crawling efficiency.

Sitemap Couldn’t Be Read

Sitemap Couldn’t Be Read refers to an issue where Google is unable to process the submitted sitemap file. This could be due to syntax errors, inaccessible URLs, or server-related issues preventing the sitemap from being fetched.

Steps to Fix the Issue:

- Validate the Sitemap File:

- Use a sitemap validator tool to check for syntax errors or invalid entries in the XML file.

- Ensure the file complies with Google’s sitemap guidelines.

- Check Server Accessibility:

- Confirm that the sitemap file is hosted on a publicly accessible server.

- Test the sitemap URL in a browser to ensure it loads without errors.

- Resolve Fetch Errors:

- Use the robots.txt report in Google Search Console (which replaced the retired robots.txt Tester) to confirm that the sitemap URL is not blocked.

- Check for server response codes (e.g., 200 OK) when accessing the sitemap.

- Simplify the Sitemap Structure:

- Remove unnecessary URLs or split large sitemaps into smaller ones to improve readability.

- Use a sitemap index file if you have multiple sitemaps.

- Resubmit the Sitemap:

- After resolving issues, resubmit the sitemap in the Sitemaps section of Google Search Console.

- Monitor the status to ensure the file is successfully read.

- Monitor for Updates:

- Regularly check the Sitemaps report for any new errors.

- Keep the sitemap updated with accurate and current URLs.
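As a rough illustration of the validation step, the sketch below parses a sitemap and reports basic problems: malformed XML, an unexpected root element, and `<loc>` values that are not absolute URLs. It assumes a plain `<urlset>` sitemap and uses only the standard library; `validate_sitemap` is a hypothetical helper and does not replace a full validator.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def validate_sitemap(xml_text):
    """Return a list of problems found in a urlset sitemap (empty if none)."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"XML syntax error: {exc}"]

    problems = []
    if root.tag != f"{{{SITEMAP_NS}}}urlset":
        problems.append(f"unexpected root element: {root.tag}")

    for url in root.findall(f"{{{SITEMAP_NS}}}url"):
        loc = url.findtext(f"{{{SITEMAP_NS}}}loc", default="").strip()
        parts = urlparse(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"<loc> is not an absolute URL: {loc!r}")
    return problems


# Hypothetical sitemaps used only for illustration.
good = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "</urlset>"
)
bad = good.replace("https://example.com/", "/relative-path")
print(validate_sitemap(good))
print(validate_sitemap(bad))
```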

Couldn’t Fetch Error

Couldn’t Fetch Error occurs when Google Search Console is unable to retrieve the submitted sitemap. This issue may result from connectivity problems, server misconfigurations, or incorrect sitemap URLs.

Steps to Fix the Issue:

- Verify the Sitemap URL:

- Ensure the submitted sitemap URL is correct and accessible.

- Test the URL in a browser to confirm it loads without errors.

- Check Server Response:

- Use tools like online HTTP status checkers to verify the server response for the sitemap URL.

- Ensure the server returns a 200 OK status code.

- Inspect Robots.txt Restrictions:

- Verify that the sitemap URL is not blocked by your robots.txt file.

- Use the robots.txt report in Google Search Console (which replaced the retired robots.txt Tester) to validate accessibility.

- Resolve Server Issues:

- Check server logs for errors or misconfigurations that may prevent Googlebot from accessing the sitemap.

- Ensure the server is properly configured to handle requests from Googlebot.

- Test with Another Tool:

- Use third-party tools like XML Sitemap Validators to test sitemap accessibility.

- Identify and fix issues highlighted by these tools.

- Resubmit the Sitemap:

- After resolving the issue, resubmit the sitemap in the Sitemaps section of Google Search Console.

- Monitor the status to confirm successful retrieval by Google.

- Monitor and Audit Regularly:

- Continuously check the Sitemaps report for new “Couldn’t Fetch” errors.

- Address any emerging issues promptly to ensure uninterrupted indexing.
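The robots.txt check above can also be run locally. This sketch uses Python's standard `urllib.robotparser` on robots.txt text you have already downloaded; the rules and URLs are made-up examples. Note that this parser's rule matching is simpler than Googlebot's, so treat the result as a first indication rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser


def is_blocked_for_googlebot(robots_txt, url):
    """Return True if the given robots.txt text disallows Googlebot from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch("Googlebot", url)


# Hypothetical robots.txt content used only for illustration.
robots_txt = """\
User-agent: *
Disallow: /private/
"""
print(is_blocked_for_googlebot(robots_txt, "https://example.com/sitemap.xml"))
print(is_blocked_for_googlebot(robots_txt, "https://example.com/private/sitemap.xml"))
```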

Too Many URLs in a Sitemap

Too Many URLs in a Sitemap refers to a situation where the XML sitemap exceeds Google’s limit of 50,000 URLs or 50MB uncompressed file size. This issue arises when large websites include extensive URLs in a single sitemap file, exceeding the permissible limits and causing inefficiencies in crawling.

Steps to Fix the Issue:

- Split the Sitemap into Smaller Files:

- Divide the sitemap into multiple smaller sitemaps, each containing fewer than 50,000 URLs.

- Use a sitemap index file to reference all smaller sitemaps for better organisation.

- Prioritise Important URLs:

- Include only high-priority pages in the sitemap, such as key landing pages, category pages, and evergreen content.

- Exclude low-value or duplicate pages to optimise the sitemap’s utility.

- Compress the Sitemap:

- Compress the sitemap file using gzip to reduce its transfer size.

- Ensure the uncompressed file stays within the 50MB limit; compression saves bandwidth but does not raise the limit.

- Validate the Sitemap:

- Use an XML sitemap validator tool to ensure all smaller sitemaps are error-free and adhere to Google’s guidelines.

- Check for syntax errors and broken URLs.

- Submit the Sitemap Index:

- Submit the sitemap index file in the Sitemaps section of Google Search Console.

- Monitor the status to ensure all smaller sitemaps are successfully processed.

- Regularly Update the Sitemap:

- Keep the sitemap updated to reflect changes in your website structure.

- Remove outdated or irrelevant URLs periodically.

- Monitor GSC Reports:

- Continuously review the Sitemaps report in Google Search Console for new issues.

- Address any emerging problems promptly to maintain efficiency.
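The splitting step can be sketched as follows. The code chunks a URL list at the 50,000-URL limit and builds a sitemap index that references each child file. The file naming scheme (`sitemap-1.xml`, `sitemap-2.xml`, and so on) and the `example.com` URLs are assumptions for illustration; writing the files to disk and checking the 50MB size limit are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit stated above


def chunk_urls(urls, size=MAX_URLS):
    """Split a list of URLs into consecutive chunks of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_urlset(urls):
    """Serialise one chunk of URLs as a <urlset> sitemap."""
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(root, "url"), "loc").text = url
    return ET.tostring(root, encoding="unicode")


def build_index(sitemap_urls):
    """Serialise a <sitemapindex> that references each child sitemap."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        ET.SubElement(ET.SubElement(root, "sitemap"), "loc").text = url
    return ET.tostring(root, encoding="unicode")


# Hypothetical site with 120,000 URLs.
urls = [f"https://example.com/page-{n}" for n in range(120_000)]
chunks = chunk_urls(urls)
index = build_index(
    [f"https://example.com/sitemap-{i + 1}.xml" for i in range(len(chunks))]
)
print([len(c) for c in chunks])  # [50000, 50000, 20000]
```

Only the index file then needs to be submitted in the Sitemaps section.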

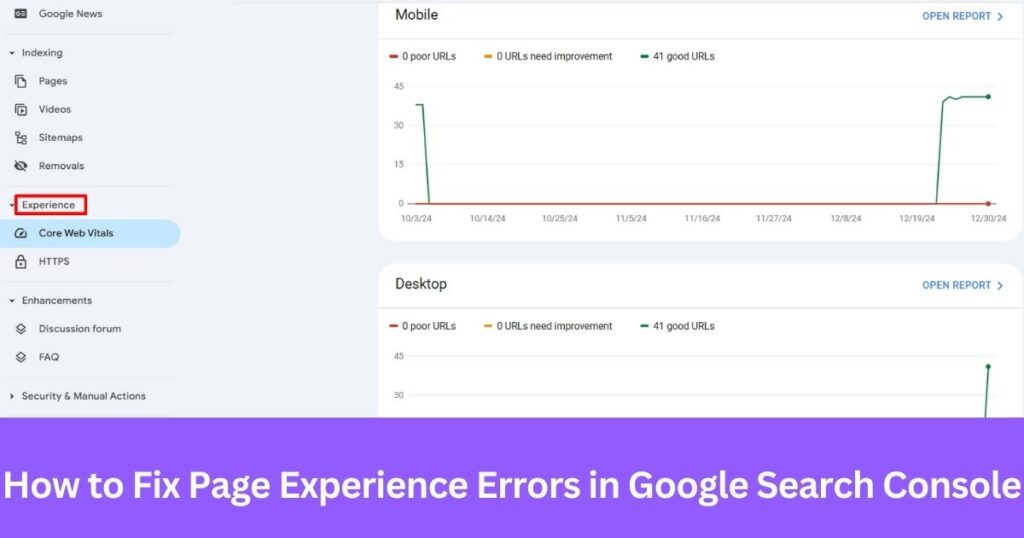

How to Fix Page Experience Errors in Google Search Console?

Page experience errors in Google Search Console refer to issues affecting the overall user experience on a website. These errors can impact rankings as they relate to essential signals like Core Web Vitals and HTTPS. A poor page experience can result in lower user engagement and reduced visibility in search results.

Core Web Vitals Issues

Core Web Vitals issues in Google Search Console refer to problems with essential metrics that measure the quality of user experience on a webpage. These metrics, Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS), evaluate a site’s loading performance, interactivity, and visual stability. Note that Google replaced FID with Interaction to Next Paint (INP) as the responsiveness metric in March 2024, so current reports show INP; most FID fixes also help INP. Core Web Vitals issues typically originate from slow servers, poorly optimised assets, or unstable layouts, which can significantly impact user satisfaction and search rankings.
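To make the three metrics concrete, the sketch below rates a measured value against Google's published thresholds: LCP is good up to 2.5 seconds and poor above 4 seconds, FID is good up to 100 ms and poor above 300 ms, and CLS is good up to 0.1 and poor above 0.25. The `rate` helper and the sample values are illustrative; Search Console itself assesses these metrics at the 75th percentile of real-user data.

```python
# (good_upper_bound, needs_improvement_upper_bound) per metric.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless score
}


def rate(metric, value):
    """Classify a metric value as 'good', 'needs improvement', or 'poor'."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical measurements used only for illustration.
for metric, value in [("LCP", 3.1), ("FID", 80), ("CLS", 0.3)]:
    print(metric, value, rate(metric, value))
```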

Largest Contentful Paint (LCP)

Largest Contentful Paint (LCP) measures the time it takes for the largest visible content element (e.g., an image, text block, or video) to load and become visible to the user. This metric directly impacts user perception of page speed and can originate from unoptimised resources, server delays, or large file sizes.

Steps to Fix the Issue:

- Optimise Images:

- Compress images using tools like TinyPNG.

- Serve images in modern formats such as WebP for better compression.

- Use responsive image techniques to ensure appropriate sizing for different devices.

- Minimise Render-Blocking Resources:

- Defer the loading of non-critical JavaScript files using the async or defer attributes.

- Combine and minify CSS files to reduce load times.

- Use inline critical CSS to prioritise above-the-fold content rendering.

- Enhance Server Response Time:

- Upgrade to a faster hosting provider or server configuration.

- Use a Content Delivery Network (CDN) to distribute content closer to users.

- Implement server-side caching to reduce load times for repeat visitors.

- Enable Lazy Loading:

- Implement lazy loading for offscreen images and videos to prioritise visible content, but do not lazy-load the LCP element itself, as that delays it.

- Use the loading="lazy" attribute for images and iframes.

- Preload Key Resources:

- Use rel="preload" on a link element to prioritise loading of critical assets like fonts and hero images.

- Ensure preloaded assets are essential and improve rendering speed.

- Reduce CSS and JavaScript Complexity:

- Audit unused CSS and JavaScript and remove redundant code.

- Use tools like PurifyCSS to eliminate unnecessary assets.

- Test and Validate Improvements:

- Use Google’s PageSpeed Insights or Lighthouse tools to test LCP performance.

- Monitor improvements and resolve any residual issues.
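One of the steps above, finding render-blocking scripts, lends itself to a simple check. The sketch below lists external scripts in the document head that have neither `async`, `defer`, nor `type="module"`. It is a simplified heuristic built on the standard library's `HTMLParser`; the class name and sample markup are assumptions, and tools like Lighthouse give a fuller picture.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Lists external scripts in <head> that lack async, defer, or type="module"."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head and attrs.get("src"):
            deferred = (
                "async" in attrs or "defer" in attrs or attrs.get("type") == "module"
            )
            if not deferred:
                self.blocking.append(attrs["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


# Hypothetical markup used only for illustration.
html = """
<head>
  <script src="/js/analytics.js"></script>
  <script src="/js/app.js" defer></script>
  <script src="/js/widget.js" async></script>
</head>
"""
finder = BlockingScriptFinder()
finder.feed(html)
print(finder.blocking)  # ['/js/analytics.js']
```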

First Input Delay (FID)

First Input Delay (FID) measures the time it takes for a web page to respond to a user’s first interaction, such as clicking a button, tapping a link, or entering text. FID reflects the website’s interactivity and responsiveness. High FID values are often caused by heavy JavaScript execution, inefficient event handling, or unoptimised third-party scripts, which can delay the browser’s ability to respond to user actions.

Steps to Fix the Issue:

- Minimise JavaScript Execution:

- Defer or remove unnecessary JavaScript files.

- Split long tasks into smaller, asynchronous operations to avoid blocking the main thread.

- Optimise third-party scripts by loading them asynchronously.

- Optimise Event Listeners:

- Reduce the number of event listeners by consolidating them where possible.

- Use passive event listeners for better performance.

- Implement Browser Caching:

- Cache frequently used resources to reduce the load time for repeat visits.

- Use a Content Delivery Network (CDN) to serve assets faster.

- Optimise Web Fonts:

- Use font-display: swap to avoid delays caused by web font loading.

- Preload critical fonts to ensure they are available immediately when needed.

- Reduce Third-Party Dependencies:

- Limit the number of third-party scripts that can slow down page responsiveness.

- Use performance monitoring tools to identify and remove inefficient scripts.

- Improve Server Response Time:

- Use a faster hosting service or upgrade server infrastructure.

- Implement server-side optimisations such as caching and database query improvements.

- Test and Validate Fixes:

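- FID is a field metric that requires real user input, so Lighthouse cannot measure it directly; use Lighthouse’s Total Blocking Time (TBT) as a lab proxy and check the field data in PageSpeed Insights.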

- Continuously monitor the Core Web Vitals report in Google Search Console to ensure FID improvements.

Cumulative Layout Shift (CLS)

Cumulative Layout Shift (CLS) measures the visual stability of a webpage by tracking how often and how much visible elements move unexpectedly during loading. High CLS values can occur due to unreserved space for images, ads, or dynamic content, leading to a poor user experience and increased frustration.

Steps to Fix the Issue:

- Specify Dimensions for Images and Videos:

- Always include width and height attributes for images and videos.

- Use CSS aspect ratio boxes to reserve space for media elements before they load.

- Reserve Space for Ads:

- Allocate specific areas for ads using placeholder elements.

- Avoid inserting ads dynamically above existing content.

- Optimise Fonts:

- Use font-display: swap to prevent invisible text while web fonts load.

- Preload essential fonts to minimise layout shifts caused by font changes.

- Avoid Injecting Dynamic Content Above Existing Content:

- Ensure dynamic elements, such as banners or notifications, are added below existing content.

- Where content must move, animate it with CSS transform properties, which do not count towards layout shift.

- Use Static Containers for Embeds:

- Assign static dimensions to iframe or embedded content, such as videos or maps.

- Reserve the container’s space in CSS so the embed does not push surrounding content when it loads.

- Test and Monitor with Tools:

- Use Google’s Lighthouse to analyse and improve CLS scores.

- Continuously monitor the Core Web Vitals report in Google Search Console to track improvements.
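The first step, adding dimensions to images, is easy to audit. This sketch flags `<img>` elements that are missing a `width` or `height` attribute. It only inspects HTML attributes, so images sized purely through CSS (for example with `aspect-ratio`) would be reported as well; the helper names and sample markup are illustrative assumptions.

```python
from html.parser import HTMLParser


class UnsizedImageFinder(HTMLParser):
    """Collects the src of every <img> without both width and height attributes."""

    def __init__(self):
        super().__init__()
        self.unsized = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        if not (attrs.get("width") and attrs.get("height")):
            self.unsized.append(attrs.get("src", "(no src)"))


def find_unsized_images(html):
    finder = UnsizedImageFinder()
    finder.feed(html)
    return finder.unsized


# Hypothetical markup used only for illustration.
page = """
<img src="/hero.jpg" width="1200" height="600" alt="Hero">
<img src="/banner.png" alt="Banner">
"""
print(find_unsized_images(page))  # ['/banner.png']
```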

HTTPS Issue

HTTPS issues refer to problems with the secure protocol implementation on a website. HTTPS ensures encrypted communication between the user’s browser and the server, enhancing security and trust. Issues like invalid SSL certificates, mixed content, or outdated protocols can disrupt user experience and negatively impact SEO.

Steps to Fix the Issue:

- Check SSL Certificate Validity:

- Ensure your SSL/TLS certificate is valid, up-to-date, and installed correctly.

- Use tools like SSL Labs to test the certificate for configuration issues.

- Identify and Resolve Mixed Content:

- Scan your website for HTTP resources (e.g., images, scripts) loaded on HTTPS pages.

- Update resource URLs to HTTPS or replace them with secure alternatives.

- Redirect HTTP to HTTPS:

- Set up 301 redirects to ensure all HTTP URLs automatically redirect to their HTTPS counterparts.

- Update the sitemap and internal links to use HTTPS URLs.

- Enable HSTS (HTTP Strict Transport Security):

- Configure HSTS to enforce HTTPS connections for all site visits.

- Add the Strict-Transport-Security header in your server configuration.

- Verify in Google Search Console:

- Add and verify both HTTP and HTTPS properties in GSC to monitor issues.

- Submit the HTTPS sitemap in the Sitemaps section.

- Update External Links:

- Reach out to external websites linking to your site and request updates to HTTPS URLs.

- Use canonical tags to consolidate ranking signals for HTTPS versions.

- Monitor Security Issues:

- Regularly audit the Security Issues report in GSC for warnings.

- Address vulnerabilities promptly to maintain a secure website.
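The mixed-content scan described above can be approximated with a short script. The sketch below reports subresources (images, scripts, iframes, media, and stylesheets) that are requested over plain HTTP. The tag list is illustrative rather than exhaustive, ordinary `<a>` links are deliberately ignored because they are not mixed content, and resources referenced from CSS or JavaScript are not covered.

```python
from html.parser import HTMLParser

# Tags whose URL attribute loads a subresource (illustrative, not exhaustive).
RESOURCE_ATTRS = {"img": "src", "script": "src", "iframe": "src",
                  "audio": "src", "video": "src", "source": "src", "link": "href"}


class MixedContentFinder(HTMLParser):
    """Collects (tag, url) pairs for subresources loaded over http://."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr = RESOURCE_ATTRS.get(tag)
        if attr is None:
            return
        attrs = dict(attrs)
        # For <link>, only stylesheets count here; canonical links are not loaded.
        if tag == "link" and "stylesheet" not in (attrs.get("rel") or "").lower():
            return
        url = (attrs.get(attr) or "").strip()
        if url.lower().startswith("http://"):
            self.insecure.append((tag, url))


def find_mixed_content(html):
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure


# Hypothetical HTTPS page used only for illustration.
page = """
<link rel="stylesheet" href="https://example.com/site.css">
<link rel="canonical" href="http://example.com/">
<img src="http://example.com/hero.jpg" width="800" height="400">
<script src="https://example.com/app.js"></script>
<a href="http://example.org/">External link</a>
"""
print(find_mixed_content(page))  # [('img', 'http://example.com/hero.jpg')]
```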

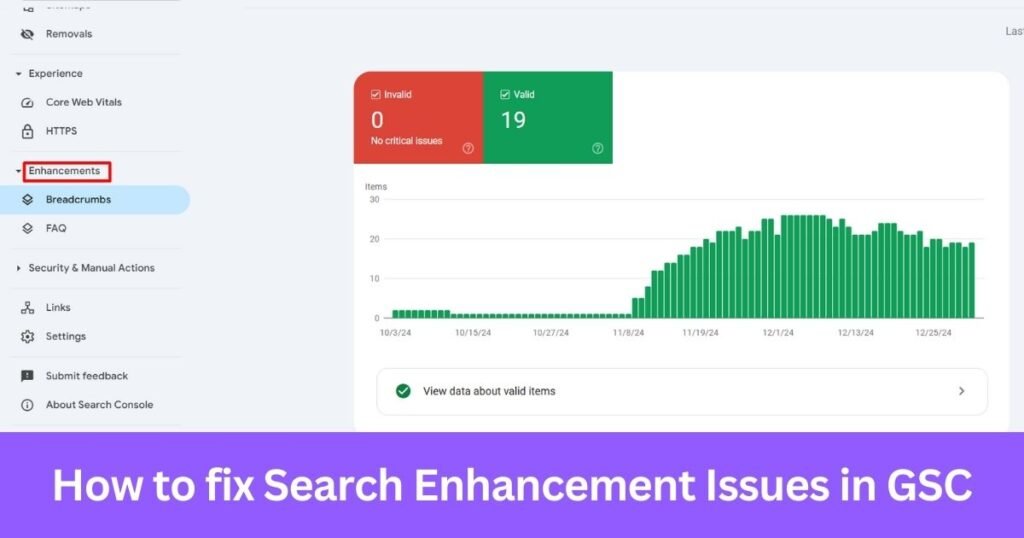

How to Fix Search Enhancement Issues in GSC?

Search enhancement issues in Google Search Console refer to problems with structured data or advanced features like breadcrumbs, sitelinks search box, or product markup that enhance the appearance of your website in search results. These enhancements improve the visibility and click-through rate of a webpage but can lead to errors if improperly implemented.

Steps to Fix the Issue:

- Review the Enhancements Report:

- Navigate to the Enhancements section in Google Search Console.

- Identify errors related to specific enhancements like breadcrumbs, AMP, or structured data.

- Validate Structured Data:

- Use Google’s Rich Results Test to validate the structured data implementation.

- Ensure the JSON-LD, Microdata, or RDFa markup is correctly formatted and adheres to Google’s guidelines.

- Fix Markup Errors:

- Correct issues flagged in the Enhancements report, such as missing required fields or invalid property values.

- Update your site’s HTML or CMS settings to resolve these errors.

- Test Updated Pages:

- After making corrections, use the Rich Results Test or URL Inspection Tool to verify the updates.

- Ensure the structured data produces the intended rich results.

- Resubmit Affected URLs:

- Use the URL Inspection Tool to request reindexing of pages with fixed enhancements.

- Monitor the Enhancements report for validation results.

- Follow Google’s Guidelines:

- Regularly consult Google’s Search Central documentation for best practices on structured data.

- Avoid spammy or irrelevant structured data to prevent manual penalties.

- Monitor for New Errors:

- Continuously review the Enhancements report in Google Search Console to identify and address emerging issues promptly.

- Perform routine audits to maintain compliance and improve search visibility.
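Before running the Rich Results Test, a quick local check can catch the most common markup errors. The sketch below extracts JSON-LD blocks from a page, confirms they parse as JSON, and checks that each breadcrumb `ListItem` has a `position` and a `name`. The list of checked fields is a simplifying assumption for illustration; Google's documentation for each rich result type remains the authority on required properties.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False


def check_breadcrumbs(html):
    """Return a list of problems found in BreadcrumbList markup (empty if none)."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    problems = []
    for raw in extractor.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"invalid JSON-LD: {exc.msg}")
            continue
        if not isinstance(data, dict) or data.get("@type") != "BreadcrumbList":
            continue
        for item in data.get("itemListElement", []):
            if not isinstance(item, dict):
                problems.append("itemListElement entry is not an object")
                continue
            for field in ("position", "name"):
                if field not in item:
                    problems.append(f"ListItem missing {field!r}")
    return problems


# Hypothetical markup: the second ListItem lacks a position.
html = """
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "BreadcrumbList",
 "itemListElement": [
   {"@type": "ListItem", "position": 1, "name": "Home", "item": "https://example.com/"},
   {"@type": "ListItem", "name": "Guides"}
 ]}
</script>
"""
print(check_breadcrumbs(html))  # ["ListItem missing 'position'"]
```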

How to Fix Security Issues and Manual Actions in GSC?

Security and manual action errors in Google Search Console refer to warnings and penalties that affect the safety and compliance of your website. Security issues such as malware, phishing, and hacked content can compromise user trust, while manual actions are penalties imposed by Google for violations of their webmaster guidelines.

Security Issues

Security issues in Google Search Console refer to threats identified on your website that can compromise user safety and trust. These may include malware, phishing attacks, or hacked content that exposes visitors to potential harm. Google flags these issues to protect users and maintain the integrity of search results.

Hacked Content

Hacked content refers to unauthorised changes made to your website by attackers. These changes may include malicious code injections, phishing pages, or spam content designed to harm users or manipulate search engine rankings. Hacked content can damage your site’s reputation, reduce user trust, and lead to penalties from Google.

Steps to Fix the Issue:

- Identify Hacked Pages:

- Review the Security Issues section in Google Search Console to locate affected pages.

- Use external tools like Sucuri or Google’s Safe Browsing to perform a comprehensive site scan.

- Quarantine Your Website:

- Temporarily take the site offline to prevent further damage or user harm.

- Notify your hosting provider about the security breach for assistance.

- Remove Malicious Code:

- Access your site’s files via FTP or your hosting control panel.

- Manually remove suspicious scripts, files, or changes introduced by hackers.

- Restore from Backup:

- If available, restore your website to a clean state from a secure backup.

- Ensure the backup does not contain vulnerabilities that led to the hack.

- Update and Secure:

- Update your CMS, plugins, themes, and server software to their latest versions.

- Implement strong passwords and enable two-factor authentication for all accounts.

- Request a Review in GSC:

- Request a review from the Security Issues report in Google Search Console after resolving the issue.

- Provide detailed information on the actions taken to secure your site.

- Monitor Regularly:

- Set up monitoring tools to detect future hacks promptly.

- Schedule regular security audits to identify vulnerabilities.
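One way to spot unauthorised changes is to compare file hashes against a manifest taken while the site was known to be clean. The sketch below is a minimal version of that idea using SHA-256; the function names are hypothetical, it reads each file fully into memory, and dedicated security tools cover far more than this.

```python
import hashlib
from pathlib import Path


def sha256_of(path):
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def build_manifest(root):
    """Map each file's path (relative to root) to its SHA-256 digest."""
    root = Path(root)
    return {
        str(p.relative_to(root)): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def diff_manifests(clean, current):
    """Compare a known-clean manifest with the current one."""
    return {
        "added": sorted(set(current) - set(clean)),
        "removed": sorted(set(clean) - set(current)),
        "modified": sorted(
            k for k in clean.keys() & current.keys() if clean[k] != current[k]
        ),
    }
```

In practice, you would save the output of `build_manifest` right after a clean deployment, then run `diff_manifests` against a fresh manifest whenever a compromise is suspected. Files listed under "added" or "modified" are the first candidates for manual review.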

Malware

Malware refers to malicious software injected into your website by attackers, aiming to harm users, steal data, or compromise your site’s functionality. This issue can lead to Google flagging your site as unsafe, damaging user trust and search rankings.

Steps to Fix the Issue:

- Identify the Malware:

- Use the Security Issues section in Google Search Console to locate affected areas.

- Perform a comprehensive scan using tools like Sucuri, Wordfence, or Google Safe Browsing.

- Isolate the Threat:

- Temporarily take the site offline to prevent further spread of malware.

- Inform your hosting provider about the issue for support.

- Remove Malicious Files:

- Access your website files via FTP or hosting control panel.

- Delete suspicious scripts, plugins, or themes injected by attackers.

- Restore from a Clean Backup:

- Restore your site to a previous state using a secure backup.

- Ensure the backup is malware-free and up-to-date.

- Update and Patch Software:

- Update all CMS, plugins, and themes to their latest versions.

- Apply any available security patches to close vulnerabilities.

- Reinforce Security Measures:

- Implement firewalls and security plugins to prevent future attacks.

- Use strong passwords and enable two-factor authentication for all accounts.

- Request a Review:

- Submit a security review request in Google Search Console after resolving the issue.

- Provide detailed documentation of the steps taken to secure the site.

- Monitor Regularly:

- Continuously monitor your site for vulnerabilities or suspicious activity.

- Schedule periodic security audits to maintain a robust defence.

Phishing

Phishing refers to fraudulent attempts to deceive users into providing sensitive information, such as passwords or credit card details, by creating fake web pages or forms. When Google detects phishing on your website, it can flag the site as unsafe, significantly harming user trust and search rankings.

Steps to Fix the Issue:

- Identify Phishing Pages:

- Use the Security Issues section in Google Search Console to locate affected URLs.

- Perform a manual review to confirm the presence of phishing content.

- Take the Site Offline (if necessary):

- Temporarily disable the site to prevent further exploitation and harm to users.

- Notify your hosting provider for additional support.

- Remove Phishing Content:

- Delete malicious files or scripts injected into your website.

- Remove any unauthorised changes to forms or pages.

- Restore from a Secure Backup:

- Revert your site to a clean version using a secure, recent backup.

- Ensure that the backup is free of phishing or malicious content.

- Update Security Measures:

- Update your CMS, plugins, and themes to their latest versions.

- Implement firewalls, malware scanners, and two-factor authentication for better security.

- Request a Review in GSC:

- Request a review from the Security Issues report in Google Search Console after resolving the issue.

- Provide details of the actions taken to secure your website.

- Monitor for Recurrence:

- Set up monitoring tools to detect phishing attempts promptly.

- Regularly review site activity and conduct security audits to ensure ongoing safety.

Uncommon Downloads

Uncommon Downloads refers to files hosted on your website that are flagged by Google as potentially harmful or suspicious due to low download rates or lack of reputation. These warnings can deter users from downloading files and harm your site’s credibility.

Steps to Fix the Issue:

- Identify Uncommon Downloads:

- Review the Security Issues section in Google Search Console to locate flagged files.

- Check server logs or hosting dashboards to identify the source and nature of these files.

- Verify File Integrity:

- Ensure the files flagged as uncommon are legitimate and not compromised by malware.

- Scan the files using tools like VirusTotal to confirm they are safe.

- Provide Accurate File Metadata:

- Include clear and descriptive names, descriptions, and digital signatures for all downloadable files.

- Ensure the file metadata reflects its purpose and origin.

- Use Secure Hosting:

- Host files on secure and reputable servers to avoid suspicion.

- Ensure HTTPS is enabled for all file download links.

- Communicate with Google:

- If the files are legitimate and safe, submit a review request in Google Search Console.

- Provide evidence of the file’s integrity and security in your request.

- Monitor Download Activity:

- Regularly review download activity and user feedback to identify any future concerns.

- Remove outdated or unnecessary files to minimise risks.

Manual Actions

Manual Actions in Google Search Console refer to penalties applied by Google’s human reviewers when a website violates its Webmaster Guidelines (now called Google Search Essentials). These actions can result in reduced rankings or complete removal from search results. Common reasons include spammy structured data, unnatural links, cloaking, or thin content.

Steps to Fix the Issue:

- Identify the Manual Action:

- Navigate to the Manual Actions section in Google Search Console.

- Review the specific violation flagged by Google.

- Understand the Violation:

- Refer to Google’s Webmaster Guidelines to understand why the manual action was applied.

- Identify the content, links, or practices causing the issue.

- Address the Violation:

- Remove or disavow spammy backlinks if flagged for unnatural links.

- Correct structured data to comply with Google’s guidelines.

- Replace thin or low-quality content with valuable, original material.

- Document Fixes:

- Maintain a detailed log of the actions taken to resolve the violation.

- Gather evidence, such as screenshots or reports, to support your reconsideration request.

- Submit a Reconsideration Request:

- Use the Manual Actions section in GSC to submit a request.

- Provide a clear explanation of the issue, actions taken, and evidence of compliance.

- Monitor Results:

- Continuously review the Manual Actions section to ensure the penalty is lifted.

- Conduct regular audits to prevent future violations.

Spammy Structured Data

Spammy Structured Data refers to the misuse of structured data markup, often involving irrelevant, misleading, or manipulative content. Google flags such issues when the markup does not adhere to its structured data guidelines, which can lead to manual actions or penalties affecting the visibility of rich results in search.

Steps to Fix the Issue:

- Identify Affected Pages:

- Navigate to the Manual Actions section in Google Search Console to locate pages flagged for spammy structured data.

- Use Google’s Rich Results Test to identify specific markup issues.

- Review Structured Data Markup:

- Ensure all structured data complies with Google’s guidelines.

- Avoid including fake reviews, irrelevant information, or deceptive content in the markup.

- Correct or Remove Faulty Markup:

- Fix errors such as missing required fields, invalid properties, or duplicated schema.

- Remove structured data entirely from pages where it is unnecessary or inapplicable.

- Validate Fixes:

- Use Google’s Rich Results Test to confirm that the updated structured data is error-free.

- Ensure the markup accurately represents the page’s content.

- Request a Reconsideration:

- After correcting the issues, submit a reconsideration request in Google Search Console.

- Provide a detailed explanation of the fixes implemented to resolve the problem.

- Monitor for Recurrence:

- Regularly review the Enhancements and Manual Actions reports in GSC to catch new issues early.

- Conduct periodic audits of structured data to ensure ongoing compliance.

Unnatural Links

Unnatural Links refer to links that are artificially created to manipulate a website’s ranking in search engine results. These may include paid links, excessive reciprocal links, or links from low-quality or irrelevant websites. Google identifies such practices as violations of its Webmaster Guidelines and may impose penalties that negatively impact your site’s visibility.

Steps to Fix the Issue:

- Identify Unnatural Links:

- Use tools like Google Search Console’s Links report or third-party platforms (e.g., Ahrefs, SEMrush) to analyse your backlink profile.

- Look for links from spammy, low-quality, or irrelevant domains.

- Remove Harmful Links:

- Contact webmasters of linking sites and request the removal of unnatural or harmful backlinks.

- Document your outreach efforts for transparency in your reconsideration request.

- Disavow Remaining Links:

- Use Google’s Disavow Tool to submit a list of links or domains you cannot remove manually.

- Ensure the disavow file is formatted correctly and includes only problematic links.

- Address Internal Linking Practices:

- Review your internal linking strategy to ensure it follows best practices and does not appear manipulative.

- Avoid keyword stuffing in anchor text.

- Create High-Quality Content:

- Focus on building organic, high-quality backlinks by producing valuable, shareable content.

- Engage in ethical link-building practices that align with Google’s guidelines.

- Request a Reconsideration:

- If a manual penalty has been applied, submit a reconsideration request via Google Search Console.

- Provide a detailed explanation of the actions taken to resolve the issue and prevent future violations.

- Monitor for New Issues:

- Regularly audit your backlink profile to identify and address new unnatural links promptly.

- Use tools like Google Alerts to track mentions of your website and assess linking quality.
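The disavow file mentioned above is a plain text file with one entry per line: a full URL to disavow a single page, `domain:` followed by a hostname to disavow a whole domain, and lines starting with `#` for comments. The sketch below assembles such a file; the `build_disavow_file` helper and the domains in the example are made up for illustration.

```python
from urllib.parse import urlparse


def build_disavow_file(domains=(), urls=(), note=""):
    """Return disavow-file text: '#' comments, 'domain:' lines, then full URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    for domain in sorted(set(domains)):
        lines.append(f"domain:{domain}")
    for url in sorted(set(urls)):
        # Skip URLs already covered by a whole-domain entry.
        if urlparse(url).hostname not in domains:
            lines.append(url)
    return "\n".join(lines) + "\n"


# Hypothetical backlinks used only for illustration.
text = build_disavow_file(
    domains=["spam-directory.example"],
    urls=[
        "https://blog.example/paid-post.html",
        "https://spam-directory.example/links/123",
    ],
    note="Owner of spam-directory.example did not respond to removal requests",
)
print(text)
```

Save the result as a UTF-8 `.txt` file before uploading it through the Disavow Tool.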

Cloaking or Sneaky Redirects

Cloaking or Sneaky Redirects refer to deceptive practices where the content presented to search engine bots differs from what users see, or when users are redirected to unexpected pages. These practices violate Google’s Webmaster Guidelines and can result in manual penalties or removal from search results.

Steps to Fix the Issue:

- Identify Cloaking or Redirects:

- Use the Manual Actions section in Google Search Console to locate flagged URLs.

- Manually review your site to identify discrepancies between user-facing content and what search engines see.

- Review Server-Side Configurations:

- Check .htaccess files, JavaScript, or PHP scripts for redirect rules.

- Ensure all redirects serve legitimate purposes and align with user expectations.

- Remove Deceptive Practices:

- Eliminate scripts or code that alter content based on user-agent detection.

- Avoid using redirects that send users to unrelated or harmful pages.

- Ensure Consistent Content:

- Serve the same content to users and search engines.

- Use the URL Inspection tool in Google Search Console (which replaced Fetch as Google) to verify that Googlebot sees the same page as users.

- Test and Validate Redirects:

- Use tools like Screaming Frog or HTTP Header Checker to analyse all redirects.

- Ensure redirects are permanent (301) and point to relevant, intended pages.

- Request a Reconsideration:

- Submit a reconsideration request in Google Search Console after fixing cloaking or redirect issues.

- Provide evidence of the corrective measures taken to ensure compliance.

- Monitor for Recurrence:

- Conduct periodic audits to detect and prevent future cloaking or sneaky redirects.

- Educate team members and developers about Google’s guidelines to avoid unintentional violations.
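To compare what users and crawlers receive, you can fetch the same URL twice, once with a browser user agent and once with a Googlebot user agent, and compare the visible text. The sketch below covers only the comparison step and assumes you already have both HTML responses; the helper names are hypothetical, and a low similarity score is a prompt for manual review, not proof of cloaking.

```python
import difflib
import re
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, ignoring <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def visible_text(html):
    """Return the page's visible text with whitespace collapsed."""
    extractor = TextExtractor()
    extractor.feed(html)
    return re.sub(r"\s+", " ", " ".join(extractor.parts)).strip()


def content_similarity(html_a, html_b):
    """Similarity ratio (0.0 to 1.0) between the visible text of two responses."""
    return difflib.SequenceMatcher(
        None, visible_text(html_a), visible_text(html_b)
    ).ratio()


# Hypothetical responses used only for illustration.
for_user = "<body><h1>Spring gardening guide</h1><script>track()</script></body>"
for_bot_same = "<body><h1>Spring gardening guide</h1></body>"
for_bot_other = "<body><h1>Cheap watches, buy now</h1></body>"
print(content_similarity(for_user, for_bot_same))
print(content_similarity(for_user, for_bot_other))
```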

Thin or Low-Quality Content

Thin or Low-Quality Content refers to web pages with insufficient information, minimal value, or content that fails to meet user intent. These pages often result in poor user engagement and can lead to manual actions by Google, reducing the site’s visibility in search results.

Steps to Fix the Issue:

- Identify Thin Content:

- Use crawlers like Screaming Frog or SEMrush to find pages with low word counts, and Google Search Console’s Performance report to find pages with few impressions or clicks.

- Analyse user behaviour metrics to locate pages that fail to engage visitors.

- Enhance Content Quality:

- Expand thin content by adding valuable, relevant information that addresses user intent.

- Include supporting elements such as images, videos, and infographics to enrich the page.

- Remove Duplicate Content:

- Use tools like Copyscape or Siteliner to identify duplicate content across your website.

- Consolidate similar pages or add canonical tags so that ranking signals are not split across duplicates.

- Focus on E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness):

- Ensure content is well-researched and factually accurate.

- Include author bylines, credentials, and references to establish authority and trust.

- Optimise for User Intent:

- Align content with search intent by addressing common queries and offering solutions.

- Use headers, bullet points, and clear formatting for better readability.

- Monitor Content Performance:

- Track the performance of updated pages using analytics tools.

- Continuously review and improve underperforming content.

- Request a Reconsideration:

- If your site has been penalised for thin content, submit a reconsideration request in Google Search Console.

- Provide a detailed explanation of the improvements made to comply with guidelines.

Hacked Content

Hacked Content refers to malicious alterations made to your website by attackers, often including unauthorised scripts, phishing pages, or spam that compromise the site’s security and reputation. This issue can significantly harm user trust and lead to penalties from Google.

Steps to Fix the Issue:

- Identify Hacked Content:

- Use Google Search Console’s Security Issues section to locate compromised pages.

- Conduct a full site scan with security tools like Sucuri or Wordfence to detect hidden malware or unauthorised changes.

- Isolate the Problem:

- Temporarily take your site offline to prevent further harm to users or your site’s data.

- Inform your hosting provider to assist in isolating the issue.

- Remove Malicious Code:

- Manually delete infected files or code snippets from your site’s backend.

- Replace altered scripts or pages with clean, backup versions.

- Restore From a Clean Backup:

- If available, revert your site to a previously secured state using a trusted backup.

- Ensure the backup is not compromised or outdated.

- Secure Your Site:

- Update all CMS, plugins, and themes to their latest versions.

- Strengthen security by implementing firewalls, enabling two-factor authentication, and using strong passwords.

- Request a Security Review:

- After cleaning up the hack, request a review from the Security Issues report in Google Search Console.

- Include a detailed explanation of the steps taken to secure the site.

- Monitor and Prevent Future Hacks:

- Use tools like Google’s Safe Browsing to monitor your site for new threats.

- Schedule regular security audits to ensure ongoing protection.

User-Generated Spam

User-generated spam refers to spammy or irrelevant content added by users to a website, such as in forums, comment sections, or user profiles. This type of spam can harm your site’s reputation, lead to manual actions from Google, and degrade the overall user experience.

Steps to Fix the Issue:

- Identify Problematic Content:

- Use Google Search Console’s Manual Actions section to locate pages flagged for user-generated spam.

- Manually review user-contributed content, focusing on comment sections, forums, and user profiles.

- Moderate Content Proactively:

- Enable content moderation to review and approve user submissions before they go live.

- Use anti-spam plugins or tools like Akismet to automatically filter out spammy content.

- Clean Existing Spam:

- Remove irrelevant or harmful user-generated content from your site.

- Delete spammy user accounts or block offending IP addresses to prevent further issues.

- Implement CAPTCHA:

- Add CAPTCHA verification for user registrations and comments to reduce automated spam submissions.

- Educate Your Users:

- Set clear guidelines for acceptable content and behaviour on your platform.

- Display these guidelines prominently to encourage compliance.

- Secure Your Website:

- Update your CMS, plugins, and themes to their latest versions to patch vulnerabilities.

- Use web application firewalls to protect against spam bots and other malicious activities.

- Request a Reconsideration:

- If a manual action has been applied, submit a reconsideration request in Google Search Console after resolving the issues.

- Provide evidence of the steps taken to address user-generated spam.

- Monitor and Maintain:

- Regularly review user-generated content to identify and address spam promptly.

- Use analytics tools to monitor traffic patterns and detect suspicious activities.
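The moderation step above can be partly automated with a simple pre-filter that holds suspicious submissions for manual review. The following is a rough sketch, not a replacement for a dedicated service such as Akismet; the blocklist terms, the link threshold, and the function name needs_review are all illustrative assumptions.

```python
import re

URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)

# Hypothetical blocklist; tune it to the spam your site actually receives.
BLOCKED_TERMS = ("casino", "payday loan", "cheap pills")


def needs_review(comment, max_links=2):
    """Return True when a comment should be held for manual moderation."""
    text = comment.lower()
    link_count = len(URL_PATTERN.findall(comment))
    has_blocked_term = any(term in text for term in BLOCKED_TERMS)
    # Hold the comment if it is link-heavy or contains a blocked term.
    return link_count > max_links or has_blocked_term
```

A comment with a single link and no blocked terms passes straight through, while one stuffed with links waits in the queue until a moderator approves it.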

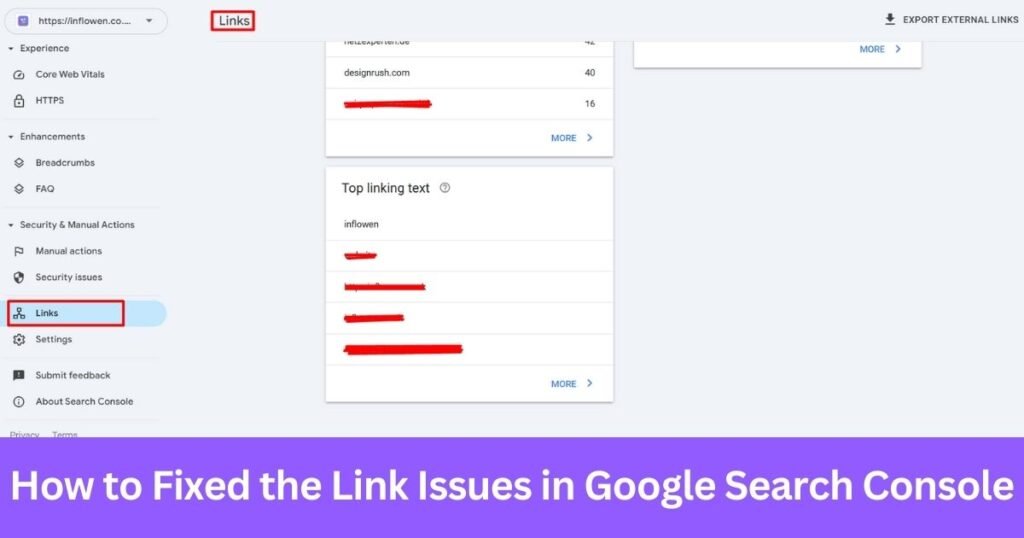

How to Fix the Link Issues in Google Search Console?

Link issues in Google Search Console refer to problems related to internal or external links that can impact your site’s usability, authority, and SEO performance. These issues may include broken links, irrelevant backlinks, or improper linking practices that hinder search engine crawling or user experience.

Disavow Links

Disavowing links means telling Google to ignore certain backlinks pointing to your site. This is necessary when your website has spammy, irrelevant, or harmful backlinks that could negatively impact its search rankings. Using the Disavow Tool allows you to protect your site from penalties related to low-quality links.

Steps to Fix the Issue:

- Identify Harmful Backlinks:

- Use tools like Google Search Console’s Links report, Ahrefs, or SEMrush to review your backlink profile.

- Look for links from spammy domains, irrelevant websites, or those with manipulative anchor text.

- Attempt to Remove Links Manually:

- Reach out to the webmasters of linking domains and politely request the removal of harmful backlinks.

- Document your outreach efforts for transparency in your disavow process.

- Prepare a Disavow File:

- Create a plain text file (.txt) listing one URL or domain per line. Prefix an entire domain with domain: (for example, domain:example.com), and start comment lines with #.

- Submit the Disavow File:

- Access Google’s Disavow Tool and upload your prepared file.

- Double-check the file for accuracy before submission to avoid disavowing useful links.

- Monitor the Impact:

- Track your backlink profile and rankings over time to assess the impact of the disavow process.

- Ensure no new harmful links are added to your profile.

- Maintain a Clean Backlink Profile:

- Regularly audit your backlinks to identify and address new harmful links.

- Focus on acquiring high-quality, relevant backlinks through ethical link-building practices.
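For the disavow file itself, Google expects one rule per line: either a full URL or an entire domain written with the domain: prefix, with optional comment lines starting with #. The sketch below generates such a file from your audit results; the function name build_disavow_file and the example domains are placeholders, not real offenders.

```python
from urllib.parse import urlparse


def build_disavow_file(domains=(), urls=(), note=""):
    """Return disavow file text: one `domain:` rule or full URL per line."""
    lines = []
    if note:
        lines.append(f"# {note}")  # comment lines start with '#'
    seen = set()
    for domain in domains:
        # Accept either a bare domain or a full URL and keep only the host.
        host = urlparse(domain).netloc or domain
        host = host.lower().strip("/")
        rule = f"domain:{host}"
        if rule not in seen:
            seen.add(rule)
            lines.append(rule)
    for url in urls:
        if url not in seen:
            seen.add(url)
            lines.append(url)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_disavow_file(
        domains=["spam.example"],
        urls=["https://bad.example/links.html"],
        note="Removal requested, no reply",
    )
    print(text)
```

Save the output as a UTF-8 .txt file before uploading it, and review every line by hand, since a domain: rule discards all links from that site.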

What are the best practices to prevent Google Search Console Errors?

The best practices to prevent Google Search Console errors are listed below.

- Regularly Update Sitemaps:

- Ensure your sitemap reflects the latest structure of your website.

- Submit the updated sitemap to Google Search Console for efficient crawling.

- Maintain Clean URL Structures:

- Use descriptive and user-friendly URLs.

- Avoid using unnecessary parameters or long, complex strings in URLs.

- Optimise Internal Linking:

- Create a logical internal linking strategy to guide users and search engines.

- Ensure that links are functional and relevant to the content.

- Implement Schema Markup:

- Use structured data to enhance search result visibility.

- Validate schema markup with tools like Google’s Rich Results Test to ensure compliance.

- Focus on Website Speed:

- Compress images, enable browser caching, and minimise JavaScript.

- Use tools like Google PageSpeed Insights to identify and fix performance bottlenecks.

- Ensure Mobile Responsiveness:

- Test your website on various devices to ensure a seamless user experience.

- Google retired its Mobile-Friendly Test in December 2023, so verify responsiveness with Lighthouse in Chrome DevTools instead.

- Regularly Audit for Errors:

- Perform periodic checks in Google Search Console to identify and resolve new issues promptly.

- Use additional tools to complement GSC for a thorough audit.
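Keeping the sitemap in step with the site is easier when the file is generated rather than edited by hand. The sketch below builds a minimal XML sitemap with the Python standard library, assuming you can supply a list of page URLs and last-modified dates from your CMS or database; build_sitemap and the example.com URLs are illustrative.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML (as bytes) from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            # lastmod is optional; use a W3C date such as 2024-01-15.
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    pages = [
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/about", None),
    ]
    print(build_sitemap(pages).decode("utf-8"))
```

The sitemap protocol limits a single file to 50,000 URLs, so larger sites would need to split the output across several files and list them in a sitemap index.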

What are the Best Tools and Resources to Simplify GSC Issue Fixes?

The best tools and resources to simplify GSC issue fixes are listed below.

- Screaming Frog SEO Spider:

- Crawl your website to identify technical SEO issues, including broken links, duplicate content, and missing metadata.

- Integrate with GSC to enhance data accuracy and issue resolution.

- SEMrush:

- Conduct in-depth site audits to detect and fix errors related to crawlability, loading speed, and backlinks.

- Use the keyword tracking and backlink analysis features to complement GSC insights.

- Ahrefs:

- Analyse your backlink profile and identify harmful links that may need disavowing.

- Monitor keyword rankings and site health for additional optimisation.

- Google’s Help Center:

- Access detailed guides and tutorials on how to use Google Search Console effectively.

- Stay updated on new features and changes in Google’s algorithms.

- Google Search Essentials (formerly Webmaster Guidelines):

- Refer to Google’s official guidelines to ensure compliance and avoid penalties.

- Use this resource as a benchmark for creating and maintaining a healthy website.

- Rich Results Test:

- Validate structured data to enhance search result visibility.

- Ensure compatibility with Google’s requirements for rich snippets.

- PageSpeed Insights:

- Test your site’s loading speed and identify performance bottlenecks.

- Receive actionable recommendations for improving Core Web Vitals metrics.

- Google Analytics:

- Track user behaviour to identify pages with high bounce rates or low engagement.

- Combine insights with GSC data for a holistic view of site performance.

Why Does Fixing Google Search Console Errors Matter for SEO?

Fixing Google Search Console errors is essential for maintaining a well-optimised website. Errors in GSC can significantly impact crawlability, indexing, and overall site performance, directly influencing search rankings and user experience.

Impact of Errors on Crawlability and Indexing:

- Errors like 404s, server issues, or blocked resources can prevent Google from fully crawling your website.

- Pages that are not indexed cannot appear in search results, limiting your site’s visibility.

Importance for Core Web Vitals and Rankings:

- Resolving issues related to Core Web Vitals ensures better page speed, interactivity, and visual stability.

- Improved site performance contributes to higher rankings, as Google prioritises user-friendly websites.

SEO Benefits of Fixing GSC Errors:

- Enhanced user experience through faster load times and fewer interruptions.

- Increased trust and credibility by maintaining a secure and error-free site.

- Better keyword performance due to optimal crawling and indexing of content.

How Accurate is Google Search Console?

Google Search Console (GSC) is a highly reliable tool for monitoring and enhancing website performance. While it provides precise data on clicks, impressions, and search queries, occasional discrepancies in reporting can occur due to data sampling and delays.

GSC’s accuracy is robust for identifying technical issues like indexing errors and page performance. However, its metrics, such as average position or click-through rate, should be interpreted alongside other tools for a comprehensive understanding. Combining GSC with third-party analytics platforms can provide a more holistic view of site performance.

What Does Validate Fix Mean in Google Search Console?

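Validate Fix in Google Search Console is a feature that checks whether reported issues, like indexing errors or Core Web Vitals problems, have been resolved. Once triggered, it initiates a re-crawl of the affected pages to confirm successful fixes. Security issues and manual actions are handled separately through a review request.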

This process helps webmasters ensure that errors are adequately addressed and updates are reflected in search results. Validation may take several days, depending on the issue’s complexity and crawl frequency. It’s a crucial step for maintaining website health and compliance with Google’s guidelines.

How Do I Check My Google Search Console Penalties?

To check for penalties in Google Search Console, navigate to the Manual Actions section under the Security & Manual Actions tab. This area highlights any penalties applied for guideline violations, like spammy links or cloaking.

If your site has a penalty, GSC provides details about the issue and guidance on resolving it. Once fixes are made, you can request a review for penalty removal. Regular monitoring of this section ensures you stay informed about your site’s compliance and performance.

How Often Should You Monitor Google Search Console?

Monitoring Google Search Console weekly ensures timely identification and resolution of errors like indexing issues, Core Web Vitals, or manual penalties. Regular checks help maintain your website’s health and optimise its performance in search results.

For high-traffic websites or ongoing campaigns, daily monitoring is recommended to catch critical issues early. Incorporating GSC reviews into monthly SEO audits provides a comprehensive overview, ensuring no long-term problems affect your site’s rankings or user experience.

How Long Does It Take for Fixes to Reflect in the Tool?

Fixes in Google Search Console typically reflect within a few days to several weeks, depending on the nature of the issue and Google’s crawl frequency. Errors like sitemap updates or minor fixes may update faster than complex problems.

Reviews of manual actions and validation of Core Web Vitals fixes can take longer: manual action reviews are carried out by people, and Core Web Vitals validation monitors real-user data over a 28-day window. Regular monitoring and requesting validation promptly after each fix ensure the recheck starts as early as possible.

Can I Automate the Google Search Console Fixing Process?

While you cannot fully automate the Google Search Console fixing process, tools like Screaming Frog, SEMrush, and Ahrefs can automate error detection and provide actionable insights. Fixing issues like manual actions or Core Web Vitals still requires human intervention.

Automation can assist in identifying recurring problems, generating reports, and monitoring updates. Integrating APIs with custom scripts can streamline certain tasks, but regular manual reviews are essential to ensure that fixes align with Google’s guidelines and website goals.
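As one illustration of such a custom script, the sketch below prepares a query for the Search Console API’s Search Analytics endpoint and flags pages that earn many impressions but few clicks. It assumes the rows have the shape the API returns (keys, clicks, impressions, ctr, position); authentication and the HTTP request are deliberately left out, and the thresholds and function names are hypothetical.

```python
from datetime import date, timedelta


def build_query_body(end, days=28, row_limit=1000):
    """Build a Search Analytics query body covering `days` days up to `end`."""
    start = end - timedelta(days=days - 1)
    return {
        "startDate": start.isoformat(),
        "endDate": end.isoformat(),
        "dimensions": ["page"],
        "rowLimit": row_limit,
    }


def low_ctr_pages(rows, min_impressions=100, max_ctr=0.01):
    """Return (page, ctr) pairs for pages with many impressions but few clicks."""
    flagged = []
    for row in rows:
        if row["impressions"] >= min_impressions and row["ctr"] <= max_ctr:
            # With dimensions=["page"], the first key is the page URL.
            flagged.append((row["keys"][0], row["ctr"]))
    return sorted(flagged, key=lambda item: item[1])


if __name__ == "__main__":
    # Made-up rows standing in for an API response.
    sample_rows = [
        {"keys": ["https://www.example.com/a"], "clicks": 1,
         "impressions": 500, "ctr": 0.002, "position": 8.4},
        {"keys": ["https://www.example.com/b"], "clicks": 40,
         "impressions": 400, "ctr": 0.1, "position": 3.1},
    ]
    print(build_query_body(date(2024, 3, 28)))
    print(low_ctr_pages(sample_rows))
```

A script like this only surfaces candidates; deciding whether a title, snippet, or page needs rewriting remains a manual judgement.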

Do I need an SEO agency to solve the GSC issues?

Yes, hiring an SEO agency can simplify resolving Google Search Console issues, especially for complex problems like manual actions, Core Web Vitals, or structured data errors. Their expertise ensures accurate fixes and long-term optimisation of your website.

While minor tasks, such as sitemap updates or resolving broken links, can be handled in-house, an agency like Inflowen provides tailored solutions for technical SEO challenges. Their comprehensive approach saves time, enhances site health, and maximises your website’s ranking potential.

What are the Best SEO Agencies to Solve the GSC Problems?